38 soft labels deep learning

PDF Unsupervised Person Re-Identification by Soft Multilabel Learning in the absence of pairwise labels across disjoint camera views. To overcome this problem, we propose a deep model for the soft multilabel learning for unsupervised RE-ID. The idea is to learn a soft multilabel (real-valued label likelihood vector) for each unlabeled person by comparing the unlabeled person with a set of known reference persons ...

(PDF) Deep learning with noisy labels: Exploring techniques and ... In this paper, we first review the state-of-the-art in handling label noise in deep learning. Then, we review studies that have dealt with label noise in deep learning for medical image analysis ...

Unsupervised deep hashing through learning soft pseudo label for remote ... We design a deep auto-encoder network SPLNet, which can automatically learn soft pseudo-labels and generate a local semantic similarity matrix. The soft pseudo-labels represent the global similarity between inter-cluster RS images, and the local semantic similarity matrix describes the local proximity between intra-cluster RS images.

Soft labels deep learning

Knowledge distillation in deep learning and its applications - PMC Soft labels refer to the output of the teacher model. In classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.

A Survey on Deep Learning for Multimodal Data Fusion 01.05.2020 · Multimodal deep learning, presented by Ngiam et al., is the most representative deep learning model based on the stacked autoencoder (SAE) for multimodal data fusion. This deep learning model aims to address two data-fusion problems: cross-modality and shared-modality representational learning. The former aims to capture better single-modality …

weijiaheng/Advances-in-Label-Noise-Learning - GitHub Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion. Modeling Noisy Hierarchical Types in Fine-Grained Entity Typing: A Content-Based Weighting Approach. Multi-level Generative Models for Partial Label Learning with Non-random Label Noise. ICML 2021. Conference date: Jul 18, 2021 -- Jul 24, 2021 [UCSC REAL Lab] The importance of …
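To make the knowledge-distillation entry above concrete, here is a minimal sketch of how a teacher's outputs become soft labels. It assumes the teacher emits raw logits that are passed through a softmax. The `teacher_logits` values are made up, and the `temperature` parameter is an assumption borrowed from common distillation practice, not something the snippet mentions.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into a probability distribution.

    A temperature above 1 flattens the distribution (assumed knob, see lead-in).
    """
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical teacher logits for one input over three classes.
teacher_logits = [4.0, 1.0, 0.5]

# Soft labels: the full distribution the student is trained to match.
soft_labels = softmax(teacher_logits, temperature=2.0)

# Hard label: only the index of the most likely class survives.
hard_label = max(range(len(teacher_logits)), key=teacher_logits.__getitem__)

print("soft labels:", [round(p, 3) for p in soft_labels])
print("hard label:", hard_label)
```

The soft labels keep the teacher's relative ranking of the non-winning classes, which is exactly the information a hard label discards.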

How to make use of "soft" labels in binary classification - Quora If you choose soft prediction, the output of the model would look like: [0.9, 0.1]; and the output from hard prediction would be "0" (the index) or "fraud". The soft prediction gives you more information about the model's confidence in prediction. The higher the value for the predicted class, the more confident and accurate (in general) the ...

Label-Free Quantification You Can Count On: A Deep Learning Experiment Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).

Technical Note: Deep learning based MRAC using rapid ultrashort echo ... The tissue labels estimated by deep learning are refined by a conditional random field based correction. The soft tissue labels are further separated into fat and water components using the two-point Dixon method. ... Result: Dice coefficients for air (within the head), soft tissue, and bone labels were 0.76 ± 0.03, 0.96 ± 0.006, and 0.88 ± ...

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels - DeepAI Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained using these generated soft-labels. These iterations are repeated for each batch of training data.

Adversarial Attacks and Defenses in Deep Learning 01.03.2020 · 1. Introduction. A trillion-fold increase in computation power has popularized the usage of deep learning (DL) for handling a variety of machine learning (ML) tasks, such as image classification, natural language processing, and game theory. However, a severe security threat to the existing DL algorithms has been discovered by the research community: …

Plant diseases and pests detection based on deep learning: a review 24.02.2021 · Deep learning is a black box, which requires a large number of labelled training samples for end-to-end learning and has poor interpretability. Therefore, how to use the prior knowledge of brain-inspired computing and human-like visual cognitive model to guide the training and learning of the network is also a direction worthy of studying. At ...

Deep Soft Error Propagation Modeling Using Graph Attention Network Soft errors are increasing in computer systems due to shrinking feature sizes. Soft errors can induce incorrect outputs, also called silent data corruption (SDC), which raises no warnings in the system and hence is difficult to detect. To prevent SDC effectively, protection techniques require a fine-grained profiling of SDC-prone instructions, which is often obtained by applying machine ...

Learning Soft Labels via Meta Learning One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

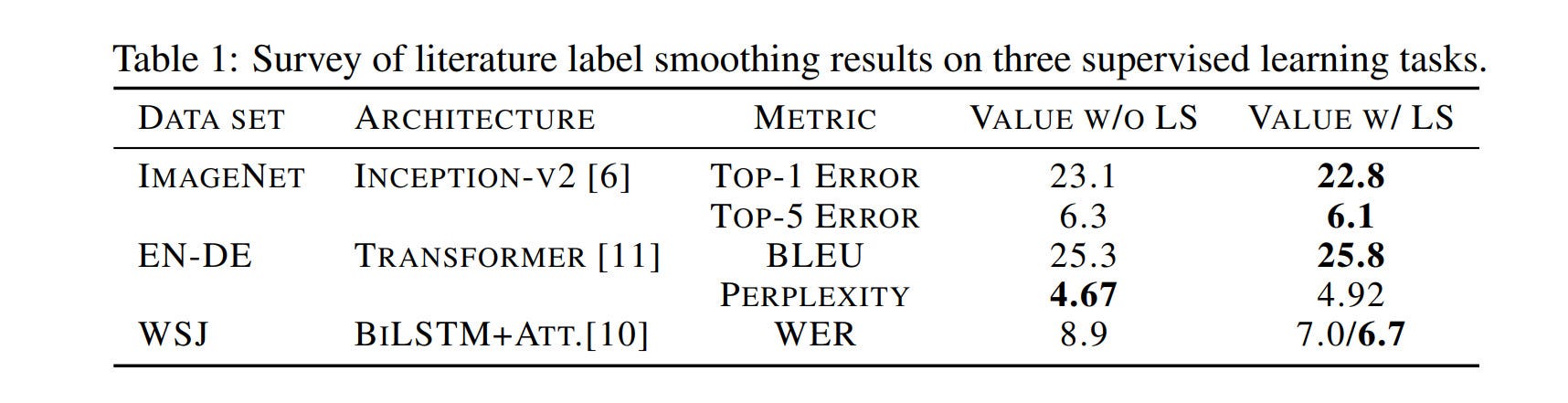

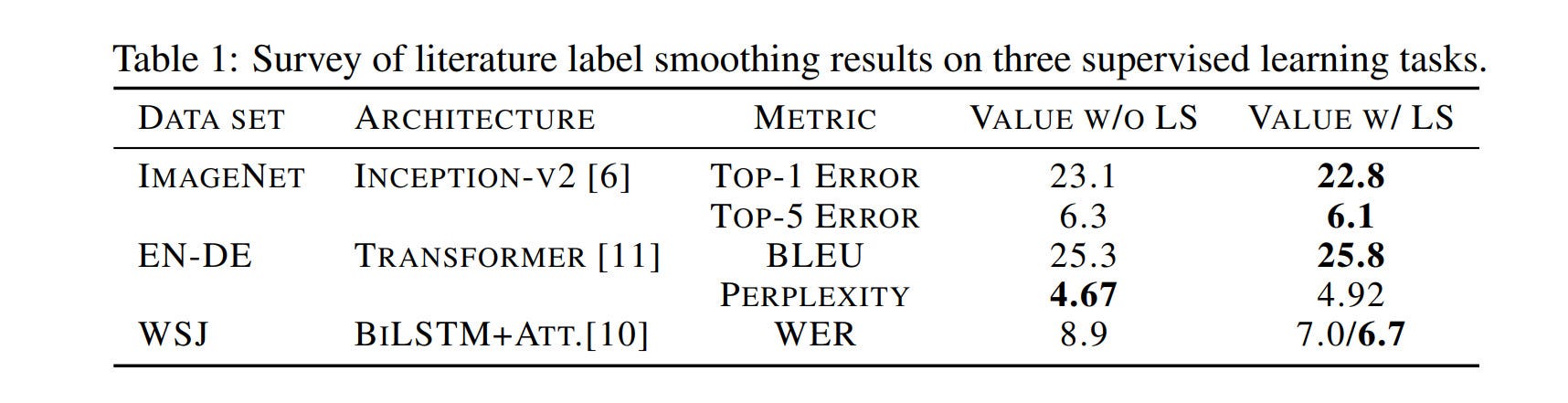

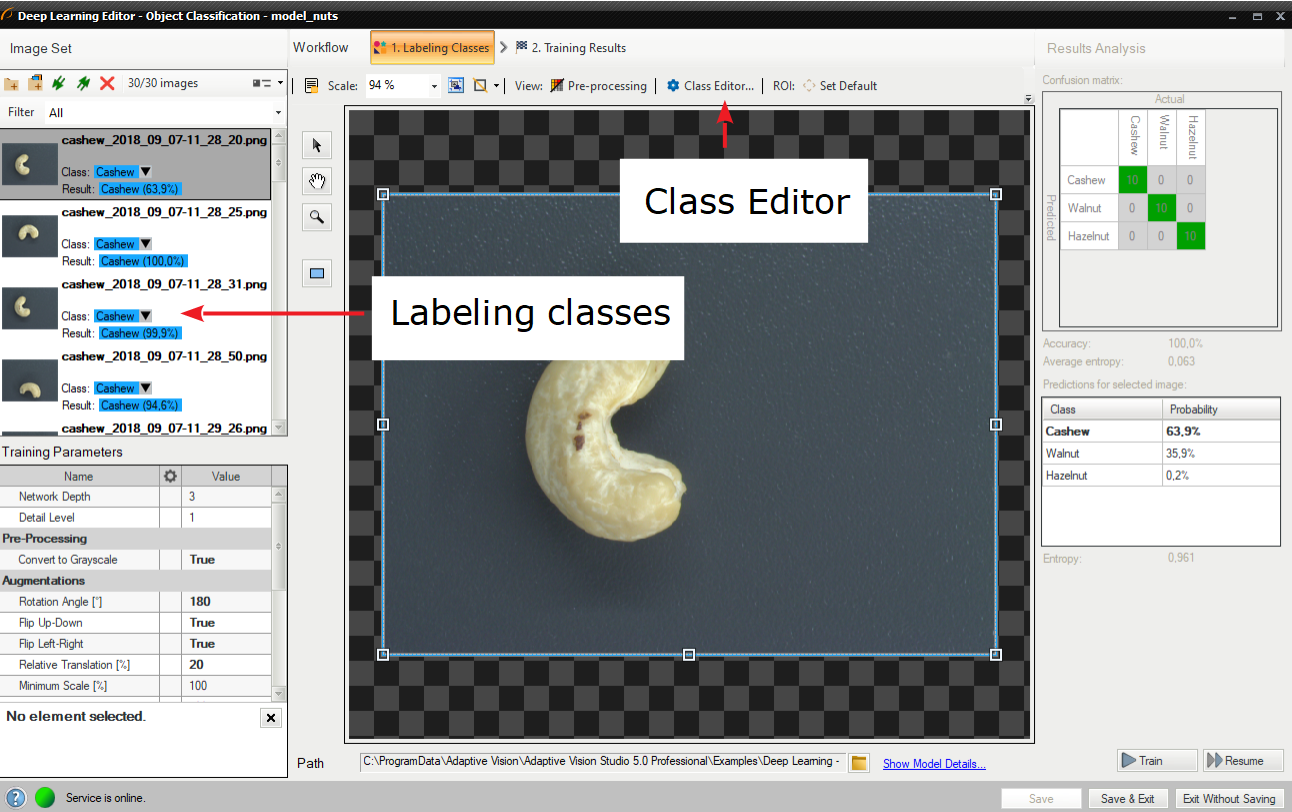

(PDF) Fruit recognition from images using deep learning Deep learning is a class of machine learning algorithms that use multi- ... across 131 labels. Each image contains a single fruit or vegetable. Separately, the …

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images The predicted possibilities from the models trained by soft labels were close to the results made by myopia specialists.

What is Label Smoothing? - Towards Data Science Label smoothing is used when the loss function is cross entropy, and the model applies the softmax function to the penultimate layer's logit vectors $z$ to compute its output probabilities $p$. In this setting, the gradient of the cross entropy loss function with respect to the logits is simply $\nabla \mathrm{CE} = p - y = \mathrm{softmax}(z) - y$.

Deep Learning from Noisy Image Labels with Quality Embedding Specifically, it consists of two important layers: (1) the contrastive layer estimates the quality variable in the embedding space to reduce noise effect; (2) the additive layer aggregates prior predictions and noisy labels as posterior to train the classifier.
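The label-smoothing entry above states that the cross-entropy gradient with respect to the logits is softmax(z) - y. The sketch below checks that identity numerically with central finite differences. The logits `z` and the smoothed target `y` are made-up values, and the identity is only checked here for targets that sum to 1.

```python
import math


def softmax(z):
    peak = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(z, y):
    """Cross entropy between target distribution y and softmax(z)."""
    p = softmax(z)
    return -sum(yi * math.log(pi) for yi, pi in zip(y, p))


def analytic_grad(z, y):
    """Closed form quoted in the text: p - y."""
    return [pi - yi for pi, yi in zip(softmax(z), y)]


def numeric_grad(z, y, eps=1e-6):
    """Central finite-difference estimate of d(CE)/dz."""
    grad = []
    for k in range(len(z)):
        up = list(z)
        down = list(z)
        up[k] += eps
        down[k] -= eps
        grad.append((cross_entropy(up, y) - cross_entropy(down, y)) / (2 * eps))
    return grad


z = [2.0, 0.5, -1.0]    # hypothetical logits
y = [0.9, 0.05, 0.05]   # hypothetical smoothed (soft) target

max_gap = max(abs(a - n) for a, n in zip(analytic_grad(z, y), numeric_grad(z, y)))
print("largest gap between analytic and numeric gradient:", max_gap)
```

Because the gradient is p - y, a soft target never asks the model to push any probability all the way to 0 or 1, which is one way to see the regularizing effect.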

Label Smoothing & Deep Learning: Google Brain explains why it works and when to use (SOTA tips ...

Robust Training of Deep Neural Networks with Noisy Labels by Graph ... Recent developments in technology, such as crowdsourcing and web crawling, have made it easier to train machine learning models that require big data. However, the data collected by non-experts may contain noisy labels, and training a classification model on the data will result in poor generalization performance.

Soft-Label Guided Semi-Supervised Learning for Bi-Ventricle ... Deep convolutional neural networks have been applied to medical image segmentation tasks successfully in recent years by taking advantage of a large amount of training data with golden standard annotations. However, it is difficult and expensive to obtain good-quality annotations in practice. This work aims to propose a novel semi-supervised learning framework to improve the ventricle ...

[multi-label] Learning a Deep ConvNet for Multi-label Classification with Partial Labels - 猫猫与橙子's blog ...

Learning with not Enough Data Part 1: Semi-Supervised Learning Xie et al. (2020) applied self-training in deep learning and achieved great results. On the ImageNet classification task, ... One is to adopt MixUp with soft labels. Given two samples, $(\mathbf{x}_i, \mathbf{x}_j)$ and their corresponding true or pseudo labels $(y_i, y_j)$, the interpolated label equation can be translated to a cross entropy ...
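As a rough illustration of MixUp with soft labels, the sketch below uses the standard MixUp interpolation (the same weight applied to inputs and labels). It does not reconstruct the truncated equation from the snippet. The sample vectors, the prediction `p`, and the fixed weight `lam` are all made up; in practice the weight is usually drawn from a Beta distribution.

```python
import math


def mixup(x_i, y_i, x_j, y_j, lam):
    """Interpolate two samples and their label vectors with weight lam."""
    x = [lam * a + (1 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y


def cross_entropy(p, y):
    """Cross entropy of prediction p against a (possibly soft) target y."""
    return -sum(t * math.log(q) for t, q in zip(y, p) if t > 0)


lam = 0.3  # fixed for reproducibility (assumption, see lead-in)
x_i, y_i = [1.0, 0.0], [1.0, 0.0, 0.0]  # sample of class 0
x_j, y_j = [0.0, 2.0], [0.0, 0.0, 1.0]  # sample of class 2

x_mix, y_mix = mixup(x_i, y_i, x_j, y_j, lam)

# Hypothetical model prediction for the mixed input.
p = [0.6, 0.1, 0.3]

# Cross entropy is linear in the target, so the loss on the soft label
# equals the same weighted sum of the two hard-label losses.
loss_soft = cross_entropy(p, y_mix)
loss_split = lam * cross_entropy(p, y_i) + (1 - lam) * cross_entropy(p, y_j)

print("mixed label:", y_mix)
print("loss on soft label:", loss_soft)
print("weighted hard-label losses:", loss_split)
```

The mixed label is itself a soft label: it assigns partial membership to both source classes.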

(PDF) Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep learning … 29.10.2017 · PDF | On Oct 29, 2017, Jeff Heaton published Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep learning: The MIT Press, 2016, 800 pp, ISBN: 0262035618 | Find, read and cite all the research ...

A review of deep learning methods for semantic segmentation … 01.05.2021 · Deep Learning Methods for Semantic Segmentation of Remote Sensing Imagery. Inspired by the superior performance and explosion of new architectures of CNNs, great efforts have been devoted to transfer the success of deep learning methods to the segmentation of remote sensing data (Liu et al., 2018, Guo et al., 2018, Ajmal et al., 2018, Garcia-Garcia et …

How to Develop an Ensemble of Deep Learning Models in Keras 28.08.2020 · Deep learning neural network models are highly flexible nonlinear algorithms capable of learning a near infinite number of mapping functions. A frustration with this flexibility is the high variance in a final model. The same neural network model trained on the same dataset may find one of many different possible “good enough” solutions each time […]

[2007.05836] Meta Soft Label Generation for Noisy Labels The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion.

Using Deep Learning for Image-Based Plant Disease Detection 22.09.2016 · Here, we demonstrate the technical feasibility using a deep learning approach utilizing 54,306 images of 14 crop species with 26 diseases (or healthy) made openly available through the project PlantVillage (Hughes and Salathé, 2015). An example of each crop-disease pair can be seen in Figure 1.

Label Smoothing: An ingredient of higher model accuracy Your labels would be 0 for cat and 1 for not cat. Now, say label_smoothing = 0.2. Using the equation above, we get: new_onehot_labels = [0 1] * (1 - 0.2) + 0.2 / 2 = [0 1] * 0.8 + 0.1, so new_onehot_labels = [0.1 0.9]. These are soft labels, instead of hard labels, that is, 0 and 1.
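The arithmetic in this entry can be reproduced with a few lines of plain Python. This is a minimal sketch of the quoted formula, one-hot times (1 - smoothing) plus smoothing divided by the number of classes. The function name `smooth_labels` is made up for illustration.

```python
def smooth_labels(one_hot, smoothing):
    """Apply label smoothing to a one-hot vector.

    Each entry becomes value * (1 - smoothing) + smoothing / num_classes.
    """
    num_classes = len(one_hot)
    return [v * (1.0 - smoothing) + smoothing / num_classes for v in one_hot]


# One-hot label for the second class ("not cat") with smoothing 0.2.
smoothed = smooth_labels([0.0, 1.0], 0.2)
print([round(v, 3) for v in smoothed])
```

For the one-hot vector [0, 1] and a smoothing value of 0.2, the result is approximately [0.1, 0.9]: the element order follows the input, so the larger value stays on the labeled class.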

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments where class labels are binary (i.e., positive for one class and a negative example for all other classes), soft label assignment allows the positive class to have the largest probability, while all other classes have a very small probability.

Data Labeling Software: Best Tools For Data Labeling in 2021 Playment is a multi-featured data labeling platform that offers customized and secure workflows to build high-quality training datasets with ML-assisted tools and sophisticated project management software. It offers annotations for various use cases, such as image annotation, video annotation, and sensor fusion annotation.

How To Label Data For Semantic Segmentation Deep Learning Models? Labeling the data for computer vision is challenging, as there are multiple types of techniques used to train the algorithms that can learn from data sets and predict the results. Image annotation...

An Overview of Multi-Task Learning for Deep Learning 29.05.2017 · While many recent Deep Learning approaches have used multi-task learning -- either explicitly or implicitly -- as part of their model (prominent examples will be featured in the next section), they all employ the two approaches we introduced earlier, hard and soft parameter sharing. In contrast, only a few papers have looked at developing better mechanisms for MTL …

(PDF) A semi-supervised generative framework with deep learning features for high-resolution ...

Deep Learning with Label Noise / Noisy Labels - GitHub Deep Learning with Label Noise / Noisy Labels. This repo consists of a collection of papers and repos on the topic of deep learning with noisy labels. All methods listed below are briefly explained in the paper Image Classification with Deep Learning in the Presence of Noisy Labels: A Survey. More information about the topic can also be found on ...

Semi-Supervised Learning With Label Propagation Label Propagation Algorithm. Label Propagation is a semi-supervised learning algorithm. The algorithm was proposed in the 2002 technical report by Xiaojin Zhu and Zoubin Ghahramani titled "Learning From Labeled And Unlabeled Data With Label Propagation." The intuition for the algorithm is that a graph is created that connects all ...
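The graph intuition can be sketched in a few lines. The code below is a simplified variant written for illustration, not the exact procedure from the technical report: each unlabeled node repeatedly takes the weighted average of its neighbours' label distributions, while labeled nodes stay clamped. The function name, the toy chain graph, and the assumption that every unlabeled node has at least one edge are all hypothetical.

```python
def propagate_labels(weights, labels, num_classes, iterations=100):
    """Spread labels over a weighted graph.

    weights: symmetric adjacency matrix (list of lists).
    labels:  class index for labeled nodes, None for unlabeled nodes.
    Returns one label distribution (a soft label) per node.
    """
    n = len(weights)
    # Labeled nodes start one-hot, unlabeled nodes start uniform.
    dist = []
    for i in range(n):
        if labels[i] is None:
            dist.append([1.0 / num_classes] * num_classes)
        else:
            dist.append([1.0 if labels[i] == c else 0.0 for c in range(num_classes)])

    for _ in range(iterations):
        updated = []
        for i in range(n):
            if labels[i] is not None:
                updated.append(dist[i])  # clamp known labels
                continue
            total = sum(weights[i])  # assumed non-zero for unlabeled nodes
            updated.append([
                sum(weights[i][j] * dist[j][c] for j in range(n)) / total
                for c in range(num_classes)
            ])
        dist = updated
    return dist


# Toy chain graph 0 - 1 - 2 - 3: node 0 is class 0, node 3 is class 1.
weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
labels = [0, None, None, 1]

soft = propagate_labels(weights, labels, num_classes=2)
for node, d in enumerate(soft):
    print(node, [round(v, 3) for v in d])
```

The unlabeled nodes end up with soft labels that reflect their distance to each labeled node: node 1 leans toward class 0 and node 2 toward class 1.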

Learning from Noisy Labels with Deep Neural Networks: A Survey Deep learning has achieved remarkable success in numerous domains with help from large amounts of big data. However, the quality of data labels is a concern because of the lack of high-quality ...

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with probability/likelihood score of eg 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
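A small sketch may help fix the distinction. It represents a soft label as a mapping from class name to membership score and a hard label as a single class name. The class names, the scores, and the helper names `harden` and `to_one_hot` are made up for illustration.

```python
def harden(soft_label):
    """Collapse a soft label to the single class with the highest score."""
    return max(soft_label, key=soft_label.get)


def to_one_hot(hard_label, classes):
    """Express a hard label in the same mapping form as a soft label."""
    return {c: 1.0 if c == hard_label else 0.0 for c in classes}


# Soft label: the element belongs to several classes with different scores.
soft = {"cat": 0.7, "dog": 0.2, "fox": 0.1}

# Hard label: exactly one class, no score attached.
hard = harden(soft)
one_hot = to_one_hot(hard, soft.keys())

print("soft:", soft)
print("hard:", hard)
print("hard as one-hot:", one_hot)
```

Going from soft to hard is lossy: the 0.2 and 0.1 memberships cannot be recovered from the hard label alone.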
